Diversity and inclusion: what you exclude

In recent years, the concepts of diversity and inclusion have become increasingly spoken about in the context of ELT. As a lexicographer working on learner’s dictionaries, I often find there’s less scope to put these ideas into practice than in other ELT materials – we don’t have illustrations or discussion topics or characters taking part in dialogues, etc. We do, of course, have to deal with vocabulary that relates to a whole range of topics – no PARSNIPs in dictionaries! – so sensitivity is required in how we deal with those entries. One area where I’m especially aware of whom I include, and how, is the selection of example sentences.

Dictionary examples and inclusion:

Each numbered sense of each entry in a learner’s dictionary has a number of example sentences to illustrate how the word or phrase is used. They aim to back up the definition by showing what the word means and also to exemplify how it’s most commonly used – its typical context(s), genre(s), collocations, grammatical forms and patterns, etc.

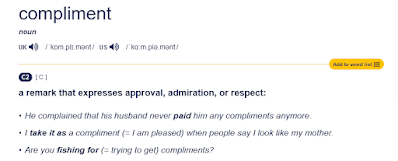

Dictionary examples are, by necessity, short and, as a result, tend to lack context. That can make it difficult to show very much about the people or groups who get mentioned. A recent social media post highlighted the example below from the Cambridge Dictionary at the entry for “compliment”, which does manage to use pronouns to subtly include a same-sex relationship.

(Image: example sentence at the entry for “compliment”. From: Cambridge Dictionary)

Besides indicating gender through pronouns, though, it’s actually quite difficult to include specific groups or characteristics in short, decontextualized example sentences. Characteristics such as race or disability are hard to show without the sentence becoming clunky and unnatural. I recently, for example, came across a really nice corpus example illustrating a phrase; it was about a non-verbal child who may have been neurodivergent or had a learning disability. By the time I’d edited it down to the length needed, however, that context had been rather lost, and I doubt that anyone reading the example would have picked up on who it was about.

Holding a mirror up to society … and choosing to exclude:

What I’m perhaps more conscious of is what I leave out.

Scrolling through hundreds of corpus lines illustrating a particular word or phrase is rather like holding a mirror up to society. And, to be honest, what you find reflected back isn’t always what you’d like to see. Although the corpora that publishers use to compile dictionaries are kept up to date, they do, necessarily, also include texts going back over time and from a wide range of sources.

One bias that I’ve been aware of since I first started out in lexicography some 25 years ago is the inherent sexism in language. Going back to those pronouns again, time and again I’ll sort a screenful of corpus lines and scroll down to find predominantly he/his or she/her coming up alongside a particular word or phrase. The stereotypes really jump out.

Now, in some ways, stereotypes are a useful shortcut when you’re trying to get across an idea quickly and succinctly. For example, if I were trying to exemplify a verb such as “drive”, “reverse” or “stall”, it would make sense to use a car as the object of the verb, rather than, say, a juggernaut, an ambulance or a camper van. In an example sentence, we want to get the key idea across as simply as possible, keeping the focus on the target vocab without unnecessary distractions or ambiguity.

Stereotypes involving people, however, are a different kettle of fish, and for almost every word I deal with, I have to make myself stop and think about what I’m seeing in the corpus and whether I’m perpetuating unfair and unhelpful stereotypes. It’s relatively easy to make sure you get a gender balance where the word in question can quite reasonably be applied to any gender, and I’ve been putting in examples of female professors and footballers and male nurses and dancers for many years. Things get trickier when a word or phrase is (almost) always used about either men or women. As a descriptivist, I don’t want to distort language and give dictionary users an unrepresentative idea of how a word is actually used. That would be misleading and could potentially land a learner in hot water.

Two phrases I came across and hesitated over recently were “leaves nothing to the imagination” (to describe tight-fitting or skimpy clothes) and “brazen hussy”. A first look at the corpus showed both applied mainly to women and, although most cites were fairly humorous and light-hearted, I felt both had uncomfortably sexist connotations. With a bit more digging, I found some examples of the first expression used about both men and women, and I managed to come up with examples that weren’t overly stereotypical. “Hussy”, however, brazen or otherwise, is used only as an offensive term for a woman or girl, so it went the way of other offensive terms: clearly labelled as such and defined, but given minimal treatment in terms of examples.

Gradual progress:

It’s important to realize that dictionaries are huge, sprawling projects containing many thousands of entries and tens of thousands of example sentences, compiled and edited over decades. In many cases, dictionary departments have very limited budgets, and although work continues to keep dictionary content up to date, the idea that a publisher could go through all the examples in a particular dictionary to check them from a DEI perspective is just unfeasible, especially in light of rapidly shifting norms. So progress is slow. During any update, lexicographers will be on the lookout for examples that look dated or no longer feel acceptable. They make changes and tweaks where they can – the “compliment” example above used “her” rather than “his” in an earlier edition. They include a more diverse range of people and contexts where they can and exclude more examples of harmful and offensive stereotypes. But it’s not an overnight fix, so bear with us.

Footnote 1: Brazen Hussy is also a type of flower, so named because its bright yellow flowers set against dark purple leaves stand out so brazenly when it blooms in early spring.

Footnote 2: AI doesn’t do this – it just takes the data it’s fed at face value and replicates the stereotypes and biases. Just saying …